[Survey] MLforHPC Benchmarks

ML For HPC Benchmarks

The screenshots below show materials related to HPC benchmarks, although most of them address HPC for AI rather than AI for HPC.

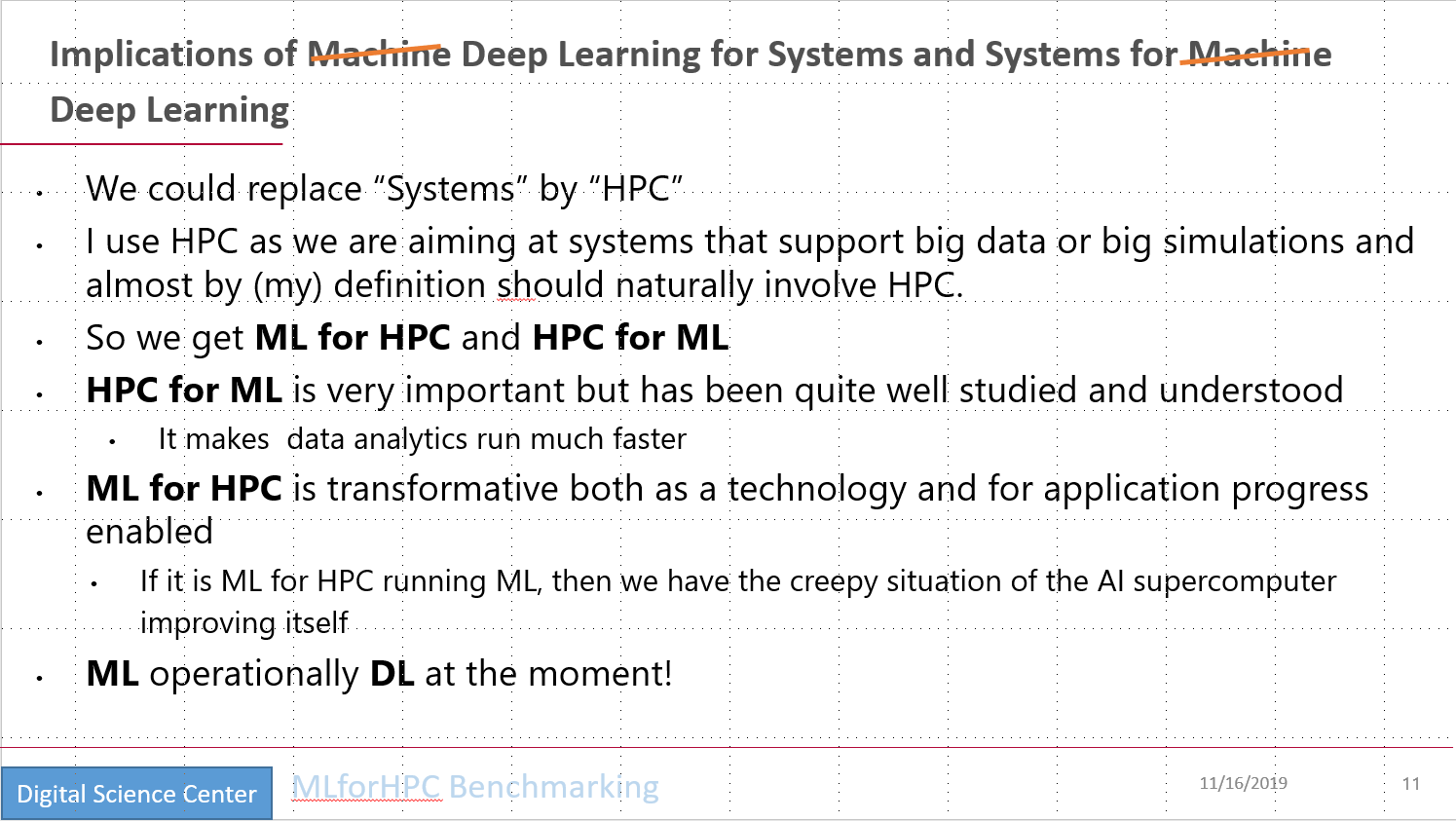

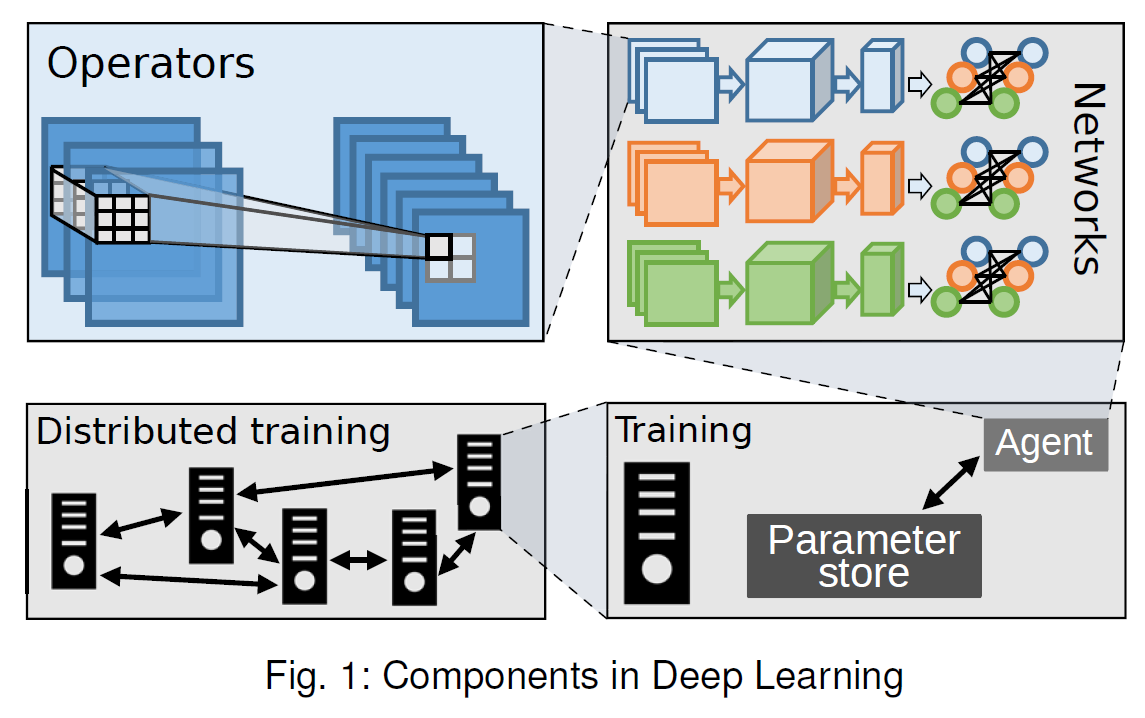

Implications of Integration of Deep Learning and HPC for Benchmarking

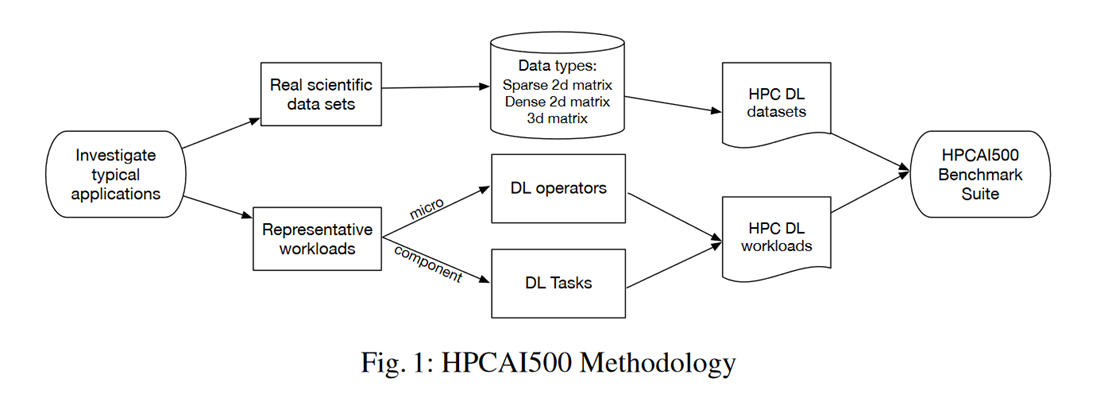

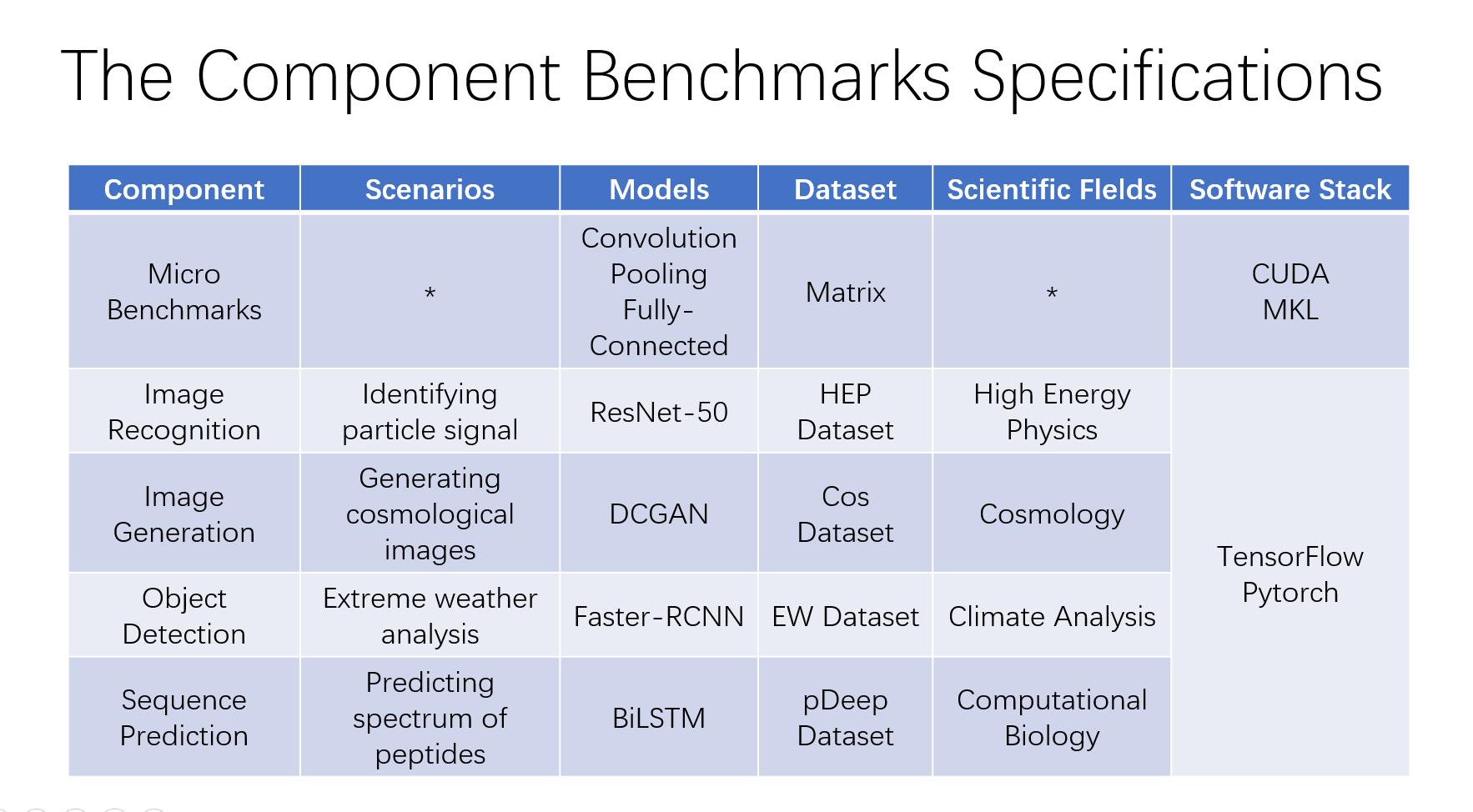

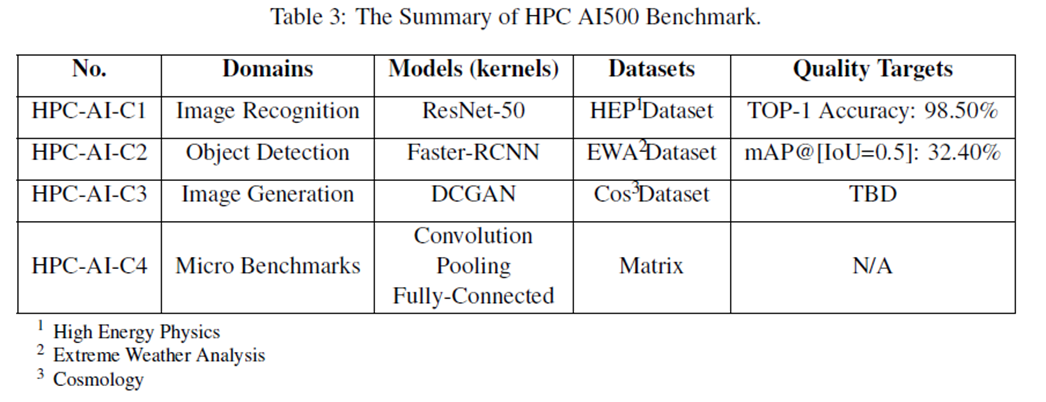

HPC AI500: A Benchmark Suite for HPC AI Systems

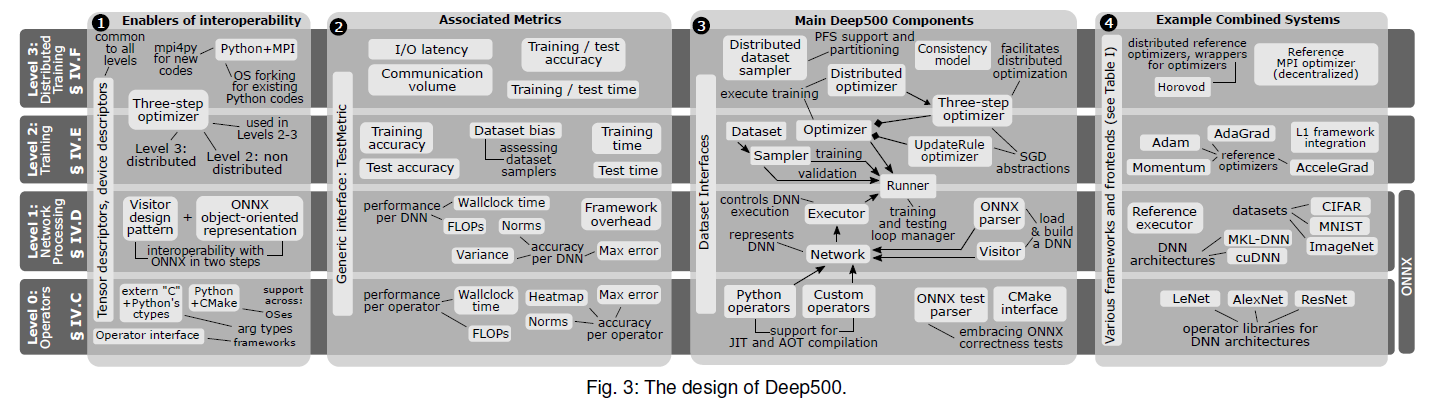

A Modular Benchmarking Infrastructure for High-Performance and Reproducible Deep Learning

This benchmark (Deep500) uses the Open Neural Network Exchange (ONNX) format to store DNNs reproducibly. Because almost all major frameworks can export models to ONNX files, the benchmark can combine models from different frameworks within a single infrastructure.
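To illustrate why a framework-neutral, file-based model description helps reproducibility, here is a minimal stdlib-only sketch. It does not use the real ONNX library: `Node`, `serialize`, `deserialize`, and `fingerprint` are hypothetical names for a toy graph format that only mimics ONNX's idea of typed operator nodes connected by named tensors (weights are ignored). Deterministic serialization plus a content hash lets two runs, or two frameworks, confirm that they benchmark exactly the same network.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List, Tuple


# Hypothetical toy node, loosely modeled on ONNX's graph of typed operator
# nodes connected by named tensors. This is NOT the ONNX API.
@dataclass
class Node:
    op_type: str
    inputs: Tuple[str, ...]
    outputs: Tuple[str, ...]
    attrs: Dict[str, Any] = field(default_factory=dict)


def serialize(nodes: List[Node]) -> str:
    # sort_keys and fixed separators make the text independent of dict
    # insertion order, so equal graphs always produce identical bytes.
    return json.dumps([asdict(n) for n in nodes], sort_keys=True, separators=(",", ":"))


def deserialize(text: str) -> List[Node]:
    # JSON has no tuple type, so lists are converted back to tuples.
    return [
        Node(d["op_type"], tuple(d["inputs"]), tuple(d["outputs"]), d["attrs"])
        for d in json.loads(text)
    ]


def fingerprint(nodes: List[Node]) -> str:
    # A content hash lets two runs confirm they use the same network.
    return hashlib.sha256(serialize(nodes).encode("utf-8")).hexdigest()


# A dense layer followed by ReLU: y = relu(x @ W + b)
model = [
    Node("MatMul", ("x", "W"), ("h0",)),
    Node("Add", ("h0", "b"), ("h1",)),
    Node("Relu", ("h1",), ("y",)),
]
restored = deserialize(serialize(model))
assert restored == model
assert fingerprint(restored) == fingerprint(model)
```

The real format additionally stores tensors, shapes, and opset versions; the sketch keeps only the structural idea.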

The essence of Deep500 is its layered and modular design, which allows users to independently extend and benchmark DL procedures related to simple operators, whole neural networks, training schemes, and distributed training. The principles behind this design can be reused to enable interpretable and reproducible benchmarking of extreme-scale codes in domains outside DL.

Benchmark Challenges

- customizability: the ability to seamlessly and effortlessly combine different features of different frameworks and still be able to provide fair analysis of their performance and accuracy.

- metrics: considering performance, correctness, and convergence in shared- as well as distributed-memory environments.

  - some metrics measure the performance of both the whole computation and its fine-grained elements, for example latency or overhead.

  - others, such as accuracy or bias, assess the quality of a given algorithm, its convergence, and its generalization to previously unseen data.

  - combined metrics such as time-to-accuracy capture the tradeoff between performance and accuracy.

  - dedicated metrics cover the distributed part of DL codes: communication volume and I/O latency.

- performance and scalability: the benchmarking infrastructure must itself be designed for distributed DL so that benchmarks run with high performance and scale well.

- validation: a benchmarking infrastructure for DL must make it possible to validate results against several criteria. Deep500 offers validation of convergence, correctness, accuracy, and performance (discussed in § V of the Deep500 paper).

- reproducibility: the ability to reproduce or at least interpret results.
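Time-to-accuracy, listed under metrics above, can be sketched as follows, assuming the training loop records `(elapsed_seconds, validation_accuracy)` pairs in chronological order. The function name, log format, and sample numbers are hypothetical and purely illustrative; this is not Deep500's API.

```python
from typing import Optional, Sequence, Tuple


def time_to_accuracy(
    log: Sequence[Tuple[float, float]], target: float
) -> Optional[float]:
    """Return the first elapsed time (seconds) at which accuracy >= target.

    `log` is an assumed format: (elapsed_seconds, validation_accuracy) pairs
    recorded during training in chronological order. Returns None if the
    target accuracy is never reached.
    """
    for elapsed, accuracy in log:
        if accuracy >= target:
            return elapsed
    return None


# Hypothetical logs for two training setups (illustrative numbers only).
log_a = [(10.0, 0.52), (20.0, 0.71), (30.0, 0.80), (40.0, 0.83)]
log_b = [(8.0, 0.45), (16.0, 0.60), (24.0, 0.70), (32.0, 0.78)]

print(time_to_accuracy(log_a, 0.80))  # 30.0
print(time_to_accuracy(log_b, 0.80))  # None: reports sooner, never reaches target
```

Setup B reports progress more often, yet only setup A reaches the target; a pure throughput metric would miss this, which is why time-to-accuracy combines both performance and accuracy.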

Design and Implementation of Deep500

The core enabler in Deep500 is its modular design, which groups all the required functionality into four levels:

- “Operators”

- “Network Processing”

- “Training”

- “Distributed Training”

Each level provides relevant abstractions, interfaces, reference implementations, validation procedures, and metrics.
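To make "abstractions, interfaces, reference implementations, validation procedures, and metrics" concrete, here is a minimal sketch of what the operator level might bundle. All names (`Operator`, `Tanh`, `validate_gradient`, `median_latency`) are hypothetical and chosen for illustration; this is not Deep500's actual interface. Validation uses a central finite-difference gradient check, one common way to test operator correctness, and the metric is median forward-pass latency.

```python
import math
import statistics
import time
from abc import ABC, abstractmethod
from typing import List


class Operator(ABC):
    """Hypothetical operator-level abstraction: forward and backward passes."""

    @abstractmethod
    def forward(self, x: List[float]) -> List[float]: ...

    @abstractmethod
    def backward(self, x: List[float], grad_out: List[float]) -> List[float]: ...


class Tanh(Operator):
    """Reference implementation: element-wise tanh."""

    def forward(self, x):
        return [math.tanh(v) for v in x]

    def backward(self, x, grad_out):
        # d/dx tanh(x) = 1 - tanh(x)^2, scaled by the incoming gradient
        return [g * (1.0 - math.tanh(v) ** 2) for v, g in zip(x, grad_out)]


def validate_gradient(op: Operator, x: List[float], eps: float = 1e-6, tol: float = 1e-5) -> bool:
    """Validation: compare the analytic gradient of sum(op(x)) with central differences."""
    analytic = op.backward(x, [1.0] * len(x))
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += eps
        x_minus[i] -= eps
        numeric = (sum(op.forward(x_plus)) - sum(op.forward(x_minus))) / (2 * eps)
        if abs(numeric - analytic[i]) > tol:
            return False
    return True


def median_latency(op: Operator, x: List[float], repeats: int = 50) -> float:
    """Metric: median wall-clock time of one forward call, in seconds."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        op.forward(x)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


x = [-1.0, 0.0, 0.5, 2.0]
print(validate_gradient(Tanh(), x))  # True
print(f"{median_latency(Tanh(), x):.2e} s")
```

The same pattern (abstract interface, reference implementation, validation check, metric) would repeat at the network, training, and distributed-training levels with correspondingly larger units.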