All-Reduce Operations

All-reduce is a collective operation provided by the NCCL [1] and MPI [2] libraries.

Many parallel applications will require accessing the reduced results across all processes rather than the root process. In a similar complementary style of

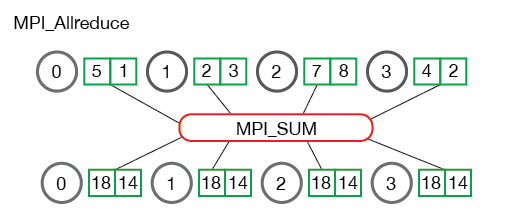

MPI_AllgathertoMPI_Gather,MPI_Allreducewill reduce the values and distribute the results to all processes.

The AllReduce operation performs a reduction (for example, sum or max) on data across devices and writes the result to the receive buffer of every rank.
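To make these semantics concrete, here is a minimal pure-Python sketch that simulates the ranks as entries of a list. It does not call NCCL or MPI. The function name `all_reduce` and the list-of-lists layout are assumptions made for illustration only.

```python
from functools import reduce
import operator


def all_reduce(send_buffers, op):
    """Simulate AllReduce; send_buffers[r] is the send buffer of rank r (hypothetical layout)."""
    # Element-wise reduction: position i combines element i from every rank.
    reduced = [reduce(op, column) for column in zip(*send_buffers)]
    # Every rank receives its own copy of the reduced buffer.
    return [list(reduced) for _ in send_buffers]


# Four simulated ranks, each holding a three-element buffer.
send = [[1, 2, 3], [10, 20, 30], [100, 200, 300], [1000, 2000, 3000]]

recv_sum = all_reduce(send, operator.add)
recv_max = all_reduce(send, max)

print(recv_sum[0])  # [1111, 2222, 3333]
print(recv_max[2])  # [1000, 2000, 3000]
```

Every entry of `recv_sum` is identical, which is what distinguishes AllReduce from a reduce whose result lands only on the root.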

The AllReduce operation is rank-agnostic: reordering the ranks does not affect the outcome of the operation.
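The rank-agnostic property can be checked with a small simulation. The sketch below is pure Python, not NCCL, and `all_reduce_sum` is a hypothetical helper. It uses integer values so that the order of summation cannot introduce rounding differences.

```python
from itertools import permutations


def all_reduce_sum(send_buffers):
    """Simulated sum AllReduce; send_buffers[r] belongs to rank r (hypothetical layout)."""
    reduced = [sum(column) for column in zip(*send_buffers)]
    return [list(reduced) for _ in send_buffers]


send = [[1, 2], [3, 4], [5, 6]]

# Run the simulated AllReduce under every possible assignment of buffers to ranks
# and collect the distinct results seen by rank 0.
results = {tuple(all_reduce_sum(list(order))[0]) for order in permutations(send)}

print(results)  # {(9, 12)}
```

All six rank orderings produce the same reduced buffer, so the set contains a single entry.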

NCCL also provides other collective operations:

- Broadcast

- Reduce

- AllGather

- ReduceScatter
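For comparison with AllReduce, the following pure-Python sketch simulates the data movement of the four collectives listed above. It is an illustration under stated assumptions, not the NCCL API: the function names are hypothetical, sum is assumed as the reduction, `None` stands in for a receive buffer that does not receive the result, and buffer lengths are assumed to be divisible by the number of ranks.

```python
def broadcast(buffers, root):
    # Every rank receives a copy of the root rank's buffer.
    return [list(buffers[root]) for _ in buffers]


def reduce_to_root(buffers, root):
    # Only the root receives the element-wise sum; None marks ranks without a result.
    total = [sum(column) for column in zip(*buffers)]
    return [total if rank == root else None for rank in range(len(buffers))]


def all_gather(buffers):
    # Every rank receives the concatenation of all buffers, in rank order.
    gathered = [value for buf in buffers for value in buf]
    return [list(gathered) for _ in buffers]


def reduce_scatter(buffers):
    # Element-wise sum, after which rank r keeps only the r-th equal-sized chunk.
    n_ranks = len(buffers)
    total = [sum(column) for column in zip(*buffers)]
    chunk = len(total) // n_ranks
    return [total[r * chunk:(r + 1) * chunk] for r in range(n_ranks)]


buffers = [[1, 2], [3, 4]]  # two simulated ranks

print(broadcast(buffers, root=0))       # [[1, 2], [1, 2]]
print(reduce_to_root(buffers, root=0))  # [[4, 6], None]
print(all_gather(buffers))              # [[1, 2, 3, 4], [1, 2, 3, 4]]
print(reduce_scatter(buffers))          # [[4], [6]]
```

In this model, an AllReduce gives the same per-rank result as a ReduceScatter followed by an AllGather.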