[Glean] Precision Format

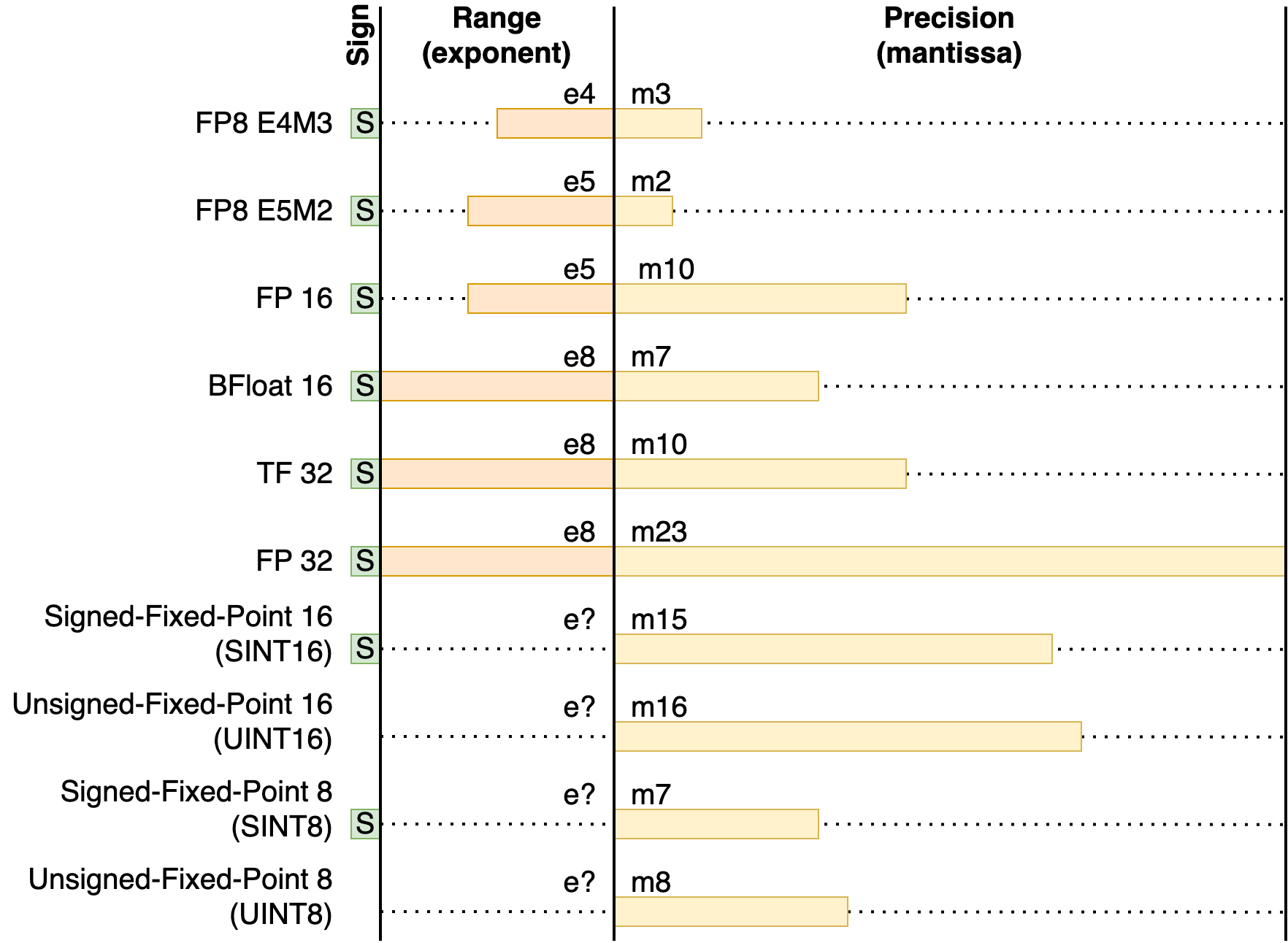

Overview

- Floating-Point Formats
  - FP32 (32 bit)
  - TF32 (19 bit)
  - BF16 (16 bit)
  - FP16 (16 bit)
  - FP8 (8 bit)
    - FP8 E5M2
    - FP8 E4M3
- Integer Formats
  - INT16 (16 bit)
    - SINT16
    - UINT16
  - INT8 (8 bit)
    - SINT8
    - UINT8

Floating-Point Formats

FP32 (32 bit)

Floating-Point 32-bit

- 1 bit sign

- 8 bit exponent

- 23 bit mantissa
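To make the 1/8/23 split concrete, here is a minimal Python sketch (standard library only) that reads the three fields out of a value's FP32 encoding and rebuilds the value from them. The helper names `fp32_fields` and `fp32_value` are illustrative, and the reconstruction assumes a normal number (exponent bias 127, implicit leading 1); subnormals, infinities and NaN are not handled.

```python
import struct

def fp32_fields(x: float) -> tuple[int, int, int]:
    """Split x (rounded to FP32) into its sign, exponent and mantissa fields."""
    # Pack as an IEEE 754 single, then read the same 4 bytes as an unsigned int.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, biased by 127
    mantissa = bits & 0x7FFFFF       # 23 bits (leading 1 is implicit for normal numbers)
    return sign, exponent, mantissa

def fp32_value(sign: int, exponent: int, mantissa: int) -> float:
    """Rebuild the value of a *normal* FP32 number from its three fields."""
    return (-1) ** sign * (1 + mantissa / 2**23) * 2.0 ** (exponent - 127)

s, e, m = fp32_fields(-6.5)
print(s, e, m)              # 1 129 5242880
print(fp32_value(s, e, m))  # -6.5
```

Here -6.5 = -1.625 × 2^2, so the biased exponent is 2 + 127 = 129 and the mantissa field holds 0.625 × 2^23.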

TF32 (19 bit)

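TensorFloat-32 (uses 19 of the 32 bits of an FP32 value)

TF32 shares its 8-bit exponent, and therefore its dynamic range, with FP32 and BF16, and its 10-bit mantissa, and therefore its precision, with FP16. With 3 more mantissa bits than BF16, it is more precise than BF16.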

- 1 bit sign

- 8 bit exponent

- 10 bit mantissa
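A rough way to see TF32's precision from Python is to take an FP32 value and clear the 13 low mantissa bits (23 - 10) that TF32 does not keep. This sketch assumes simple truncation, and the helper `to_tf32_truncate` is hypothetical; actual Tensor Core hardware may round rather than truncate, so treat it as an approximation of TF32 precision, not a bit-exact emulation.

```python
import struct

def to_tf32_truncate(x: float) -> float:
    """Approximate TF32 by keeping only the top 10 of FP32's 23 mantissa bits.

    Assumption: plain truncation; real hardware may round instead.
    """
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    bits &= 0xFFFFE000  # clear the low 13 mantissa bits; sign and exponent untouched
    (y,) = struct.unpack("<f", struct.pack("<I", bits))
    return y

print(to_tf32_truncate(1.0 + 2**-10))  # 1.0009765625 (10th mantissa bit survives)
print(to_tf32_truncate(1.0 + 2**-11))  # 1.0 (11th mantissa bit is dropped)
print(to_tf32_truncate(3.0e38) > 2.9e38)  # True: FP32's 8-bit exponent range is kept
```

Because only mantissa bits are cleared, large FP32 values stay representable, which is the practical difference from FP16.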

BF16 (16 bit)

Brain Floating Point 16-bit (bfloat16)

- 1 bit sign

- 8 bit exponent

- 7 bit mantissa
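Because BF16 has the same sign and exponent layout as FP32, a BF16 value is simply the upper 16 bits of an FP32 value. The sketch below converts in both directions with plain integer arithmetic; it assumes round-to-nearest-even (a common choice, not the only one), and the helper names are illustrative.

```python
import math
import struct

def fp32_to_bf16_bits(x: float) -> int:
    """Round x to BF16 (round-to-nearest-even) and return the 16-bit pattern."""
    if math.isnan(x):
        return 0x7FC0  # canonical quiet NaN; rounding could otherwise turn NaN into Inf
    (bits,) = struct.unpack("<I", struct.pack("<f", x))  # FP32 bit pattern
    # Adding 0x7FFF plus the lowest kept bit rounds to nearest, ties to even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return (bits + rounding_bias) >> 16

def bf16_bits_to_float(b: int) -> float:
    """Widen a BF16 pattern to FP32 by appending 16 zero mantissa bits."""
    (y,) = struct.unpack("<f", struct.pack("<I", b << 16))
    return y

print(hex(fp32_to_bf16_bits(math.pi)))    # 0x4049
print(bf16_bits_to_float(0x4049))         # 3.140625
print(hex(fp32_to_bf16_bits(1 + 2**-8)))  # 0x3f80: an exact tie rounds to the even pattern
```

Widening back to FP32 is exact, so only the FP32-to-BF16 direction loses information (7 mantissa bits remain of 23).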

FP16 (16 bit)

Floating-Point 16-bit

- 1 bit sign

- 5 bit exponent

- 10 bit mantissa
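Python's standard `struct` module can pack IEEE 754 half precision directly with the `'e'` format code, which makes FP16's limited precision and range easy to inspect. A minimal sketch; `to_fp16` and `fp16_fields` are illustrative names.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def fp16_fields(x: float) -> tuple[int, int, int]:
    """Return the (sign, exponent, mantissa) fields of x encoded as FP16."""
    (bits,) = struct.unpack("<H", struct.pack("<e", x))
    return bits >> 15, (bits >> 10) & 0x1F, bits & 0x3FF  # 1 + 5 + 10 bits

print(to_fp16(0.1))      # 0.0999755859375
print(fp16_fields(1.0))  # (0, 15, 0): the exponent bias is 15
print(to_fp16(65504.0))  # 65504.0, the largest finite FP16 value
```

With only 5 exponent bits, FP16 tops out at 65504, far below the roughly 3.4e38 reachable by FP32, BF16 and TF32.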

FP8 (8 bit)

Floating-Point 8-bit

FP8 E5M2

- 1 bit sign

- 5 bit exponent

- 2 bit mantissa
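The E5M2 layout can be decoded by hand. This sketch assumes the OCP FP8 convention for E5M2 (exponent bias 15, IEEE 754-style infinities and NaNs, subnormals when the exponent field is zero), which the section above does not itself specify; `decode_e5m2` is a hypothetical helper.

```python
import math

def decode_e5m2(byte: int) -> float:
    """Decode one FP8 E5M2 byte (assumed OCP convention: bias 15, IEEE-style Inf/NaN)."""
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 2) & 0x1F  # 5 bits
    mantissa = byte & 0x3          # 2 bits
    if exponent == 0x1F:           # all-ones exponent: Inf or NaN, as in IEEE 754
        return sign * math.inf if mantissa == 0 else math.nan
    if exponent == 0:              # subnormal: no implicit leading 1
        return sign * (mantissa / 4) * 2.0 ** (1 - 15)
    return sign * (1 + mantissa / 4) * 2.0 ** (exponent - 15)

print(decode_e5m2(0x3C))  # 1.0
print(decode_e5m2(0x7B))  # 57344.0, the largest finite E5M2 value
print(decode_e5m2(0x01))  # 1.52587890625e-05, i.e. 2**-16, the smallest subnormal
```

E5M2 keeps FP16's 5-bit exponent, so it behaves like FP16 with the mantissa cut from 10 bits to 2.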

FP8 E4M3

- 1 bit sign

- 4 bit exponent

- 3 bit mantissa
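A matching decoder for E4M3, again assuming the OCP FP8 convention (often called E4M3FN): exponent bias 7, no infinities, and a single NaN pattern per sign (`S.1111.111`), so the all-ones exponent still encodes normal values. `decode_e4m3` is a hypothetical helper.

```python
import math

def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 byte (assumed OCP "E4M3FN" convention: bias 7, no Inf)."""
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0xF  # 4 bits
    mantissa = byte & 0x7         # 3 bits
    if exponent == 0xF and mantissa == 0x7:
        return math.nan           # the only NaN encoding; there are no infinities
    if exponent == 0:             # subnormal: no implicit leading 1
        return sign * (mantissa / 8) * 2.0 ** (1 - 7)
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)

print(decode_e4m3(0x38))  # 1.0
print(decode_e4m3(0x7E))  # 448.0, the largest finite E4M3 value
print(decode_e4m3(0x01))  # 0.001953125, i.e. 2**-9, the smallest subnormal
```

Compared with E5M2, E4M3 gives up range (448 versus 57344) in exchange for one extra mantissa bit.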

Integer Formats

INT16 (16 bit)

SINT16

Signed Integer 16-bit

- 1 bit sign (two's complement)

- 15 bit value

UINT16

Unsigned Integer 16-bit

- 16 bit value (no sign bit)
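SINT16 and UINT16 use the same 16 bits; only the interpretation differs. SINT16 uses two's complement, so a set top bit means the value is negative (that bit carries weight -32768) rather than acting as a separate sign flag. A small sketch with the standard `int.from_bytes`; the helper `int16_views` is illustrative.

```python
def int16_views(pattern: int) -> tuple[int, int]:
    """Read one 16-bit pattern as (SINT16, UINT16)."""
    raw = pattern.to_bytes(2, "little")  # the same two bytes in both cases
    as_sint16 = int.from_bytes(raw, "little", signed=True)  # two's complement
    as_uint16 = int.from_bytes(raw, "little", signed=False)
    return as_sint16, as_uint16

for pattern in (0x0001, 0x7FFF, 0x8000, 0xFFFF):
    print(f"0x{pattern:04X} -> {int16_views(pattern)}")
# 0x0001 -> (1, 1)
# 0x7FFF -> (32767, 32767)
# 0x8000 -> (-32768, 32768)
# 0xFFFF -> (-1, 65535)
```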

INT8 (8 bit)

SINT8

Signed Integer 8-bit

- 1 bit sign (two's complement)

- 7 bit value

UINT8

Unsigned Integer 8-bit

- 8 bit value (no sign bit)
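The representable ranges follow directly from the bit counts: an n-bit unsigned integer covers 0 to 2^n - 1, and an n-bit two's-complement signed integer covers -2^(n-1) to 2^(n-1) - 1. A short sketch that computes them; `int_range` is an illustrative name.

```python
def int_range(bits: int, signed: bool) -> tuple[int, int]:
    """Smallest and largest value of an n-bit integer (two's complement if signed)."""
    if signed:
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2**bits - 1

for name, bits, signed in [("SINT8", 8, True), ("UINT8", 8, False),
                           ("SINT16", 16, True), ("UINT16", 16, False)]:
    print(name, int_range(bits, signed))
# SINT8 (-128, 127)
# UINT8 (0, 255)
# SINT16 (-32768, 32767)
# UINT16 (0, 65535)
```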