[Read Paper] TEA-DNN: the Quest for Time-Energy-Accuracy Co-optimized Deep Neural Networks

Problem addressed

- Neural architecture search (NAS) that takes the available hardware resources into account

- Optimizes energy consumption and execution time in addition to accuracy

- To my understanding, the goal is to find an optimal CNN structure for one specific target hardware platform

Assumption: the classification error of a network does not depend on the specific hardware it runs on.

The main idea and methods

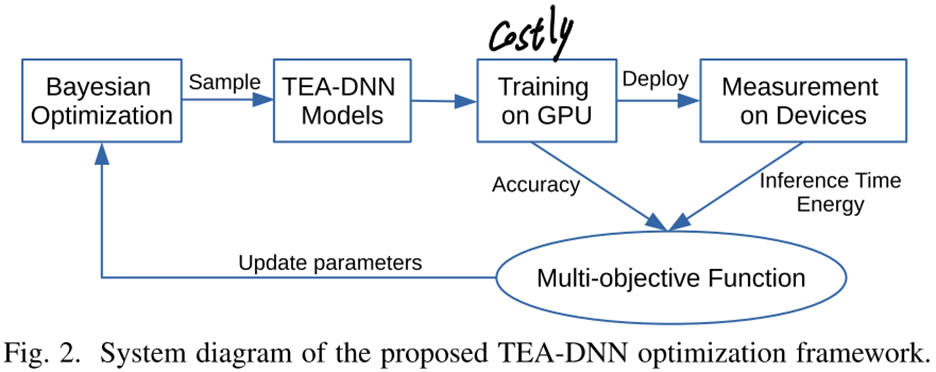

- Formulate the neural architecture search problem as a multi-objective optimization problem

- Leverage Bayesian optimization to search for Pareto-optimal solutions

- Directly measure the real-world values of all three objectives (time, energy, and accuracy)

- Why: this eliminates the need to build a model of the target hardware
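
The loop below is a minimal sketch of how a multi-objective Bayesian optimization search over directly measured objectives could look. It is not the paper's implementation. The Gaussian-process surrogate with an RBF kernel, the lower-confidence-bound acquisition, and the random-weight Chebyshev scalarization (a ParEGO-style way of collapsing three objectives into one per iteration) are all assumptions. `measure` is a hypothetical toy function standing in for running a candidate on the target device and recording its time, energy, and error. Candidate architectures are assumed to be already encoded as points in [0, 1]^2.

```python
import numpy as np

rng = np.random.default_rng(0)

def measure(x: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL stand-in for on-device measurement.
    Returns (time, energy, error) for encoded candidate x; all are minimized."""
    time = 1.0 + 2.0 * x[0] + 0.5 * x[1]
    energy = 1.0 + 0.5 * x[0] + 2.0 * x[1]
    error = 0.3 - 0.1 * x[0] - 0.1 * x[1] + 0.05 * (x[0] - x[1]) ** 2
    return np.array([time, energy, error])

def rbf(a: np.ndarray, b: np.ndarray, length: float = 0.3) -> np.ndarray:
    """RBF kernel matrix between rows of a and rows of b (unit prior variance)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / length**2)

def gp_posterior(X, y, Xq, noise=1e-4):
    """GP posterior mean and standard deviation at query points Xq."""
    K = rbf(X, X) + noise * np.eye(len(X))
    Ks = rbf(X, Xq)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    mean = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - (v**2).sum(0), 1e-12, None)
    return mean, np.sqrt(var)

# ASSUMPTION: a finite pool of 500 encoded candidate architectures.
candidates = rng.random((500, 2))
idx = [int(i) for i in rng.choice(len(candidates), size=5, replace=False)]
F = [measure(candidates[i]) for i in idx]  # initial random evaluations

for _ in range(20):
    Fa = np.array(F)
    lo, hi = Fa.min(0), Fa.max(0)
    Fn = (Fa - lo) / np.where(hi > lo, hi - lo, 1.0)  # normalize each objective
    w = rng.dirichlet(np.ones(3))                      # random trade-off weights
    s = np.max(w * Fn, axis=1)                         # Chebyshev scalarization
    y = (s - s.mean()) / (s.std() + 1e-12)             # standardize for the GP
    remaining = np.setdiff1d(np.arange(len(candidates)), idx)
    mean, std = gp_posterior(candidates[idx], y, candidates[remaining])
    pick = int(remaining[np.argmin(mean - 2.0 * std)])  # lower confidence bound
    idx.append(pick)
    F.append(measure(candidates[pick]))                 # "directly measure" it

print(f"evaluated {len(F)} candidates")
```

Because the weights are redrawn every iteration, successive picks are pulled toward different trade-offs. This is one common way to spread evaluations along a Pareto front.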

Background knowledge

- Pareto-optimal models

- Bayesian optimization
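
A model is Pareto-optimal when no other model is at least as good in every objective and strictly better in at least one. The following is a minimal sketch of that filter, assuming all three objectives (time, energy, error) are minimized. The numbers in the example are made up for illustration.

```python
from typing import List, Sequence, Tuple

# (time, energy, error): every objective is minimized.
Point = Tuple[float, float, float]

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(points: List[Point]) -> List[Point]:
    """Keep the non-dominated points, preserving input order."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Made-up measurements for four candidate networks.
candidates = [(10, 5, 0.08), (12, 4, 0.07), (11, 6, 0.09), (9, 7, 0.10)]
print(pareto_front(candidates))  # [(10, 5, 0.08), (12, 4, 0.07), (9, 7, 0.1)]
```

Here `(11, 6, 0.09)` is dropped because `(10, 5, 0.08)` is better in all three objectives. The remaining three each win on at least one objective.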

TEA-DNN Optimization Framework

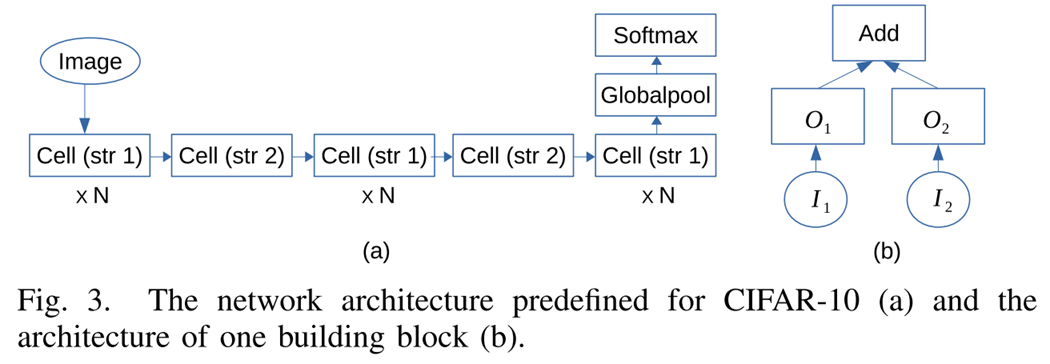

Predefined Network Architecture

The Search Space

- The input space of each building block consists of the outputs of all preceding blocks in the current cell, as well as the outputs of the two preceding cells

- The operation space includes the following eight functions commonly used in top-performing CNNs:

- max 3 × 3: 3 × 3 max pooling

- identity: identity mapping

- sep 3 × 3: 3 × 3 depthwise-separable convolution

- conv 3 × 3: 3 × 3 convolution

- sep 5 × 5: 5 × 5 depthwise-separable convolution

- conv 5 × 5: 5 × 5 convolution

- sep 7 × 7: 7 × 7 depthwise-separable convolution

- conv 7 × 7: 7 × 7 convolution
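
The search space above can be encoded roughly as shown below. The operation names mirror the list. The input labels (`prev_cell_2`, `prev_cell_1`, `block_j`) are hypothetical. The counting rule in `cell_configurations` is also an assumption not stated in these notes: it assumes each block independently picks two (input, operation) pairs and a cell has five blocks, a common setting in NASNet-style cell searches.

```python
from math import prod
from typing import List

# The eight candidate operations from the operation space.
OPERATIONS: List[str] = [
    "max 3x3",   # 3 x 3 max pooling
    "identity",  # identity mapping
    "sep 3x3", "conv 3x3",
    "sep 5x5", "conv 5x5",
    "sep 7x7", "conv 7x7",
]

def input_choices(block_index: int) -> List[str]:
    """Inputs available to block `block_index` (0-based) of the current cell:
    outputs of the two preceding cells plus every earlier block in this cell.
    The string labels are HYPOTHETICAL."""
    return ["prev_cell_2", "prev_cell_1"] + [f"block_{j}" for j in range(block_index)]

def cell_configurations(num_blocks: int = 5, branches_per_block: int = 2) -> int:
    """Number of distinct cells, ASSUMING each block independently picks
    `branches_per_block` (input, operation) pairs (not stated in the notes)."""
    return prod(
        (len(input_choices(b)) * len(OPERATIONS)) ** branches_per_block
        for b in range(num_blocks)
    )

print(input_choices(2))  # ['prev_cell_2', 'prev_cell_1', 'block_0', 'block_1']
print(cell_configurations())
```

The count grows quickly with the number of blocks, which is one reason a sample-efficient search such as Bayesian optimization is preferred over exhaustive enumeration.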