[Workshop] tinyML Talks: Saving 95% of Your Edge Power with Sparsity

- tinyML Talks - Jon Tapson: Saving 95% of Your Edge Power with Sparsity to Enable tinyML

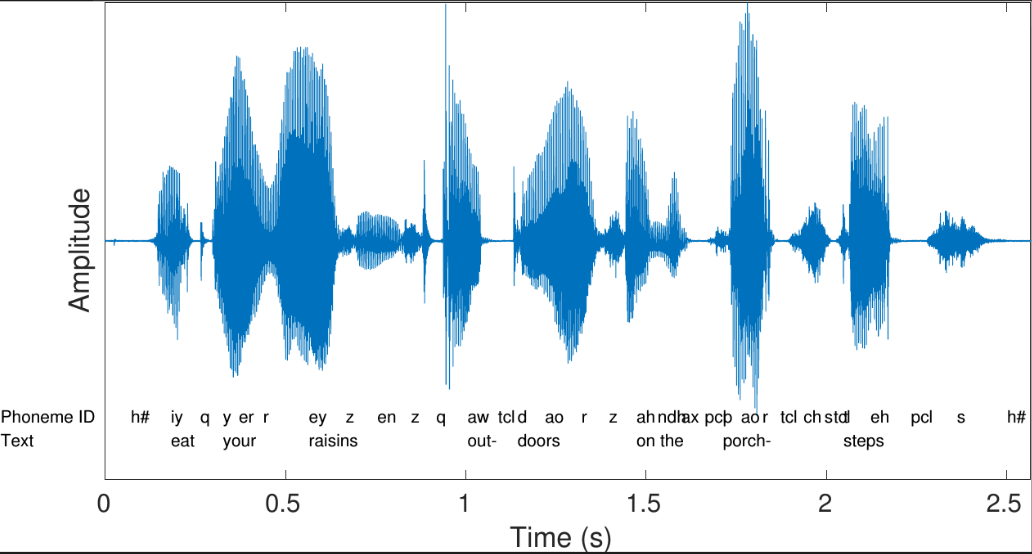

Characteristics of Edge Data Streams

The data rate is much higher than the real information rate

Sensors or systems are idle for long periods or large duty cycles (even during input)

Most inputs have high levels of redundancy

Sparse Compute

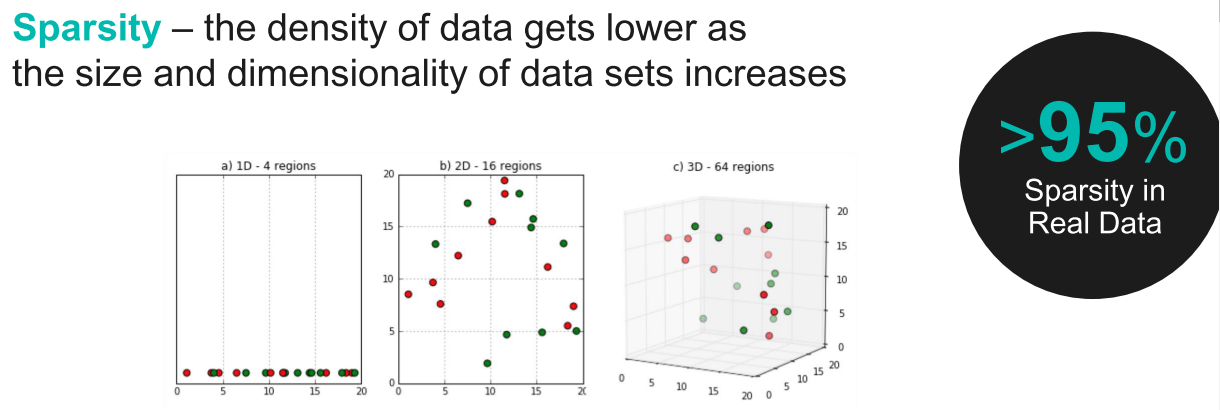

- SPARSITY in SPACE: “Curse of dimensionality”: as data dimension grows, the proportion of null data points grows exponentially

- SPARSITY in TIME: Real world signals have sparse changes

- SPARSITY in CONNECTIVITY: Compute only graph edges with significant weight (exploit “small world” connectivity)

- SPARSITY in ACTIVATION: Less than 40% of neurons may be activated by an upstream change
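The "sparsity in space" point can be made concrete with a small back-of-the-envelope sketch. It assumes a fixed number of samples dropped into a regular grid with the same number of bins along every dimension; the function name `max_occupied_fraction` and the numbers used are illustrative and not from the talk.

```python
def max_occupied_fraction(num_points: int, bins_per_dim: int, dims: int) -> float:
    """Upper bound on the fraction of grid cells that can hold at least one point.

    Each point occupies at most one cell, so the bound is num_points / total_cells.
    """
    total_cells = bins_per_dim ** dims  # grows exponentially with dims
    return min(1.0, num_points / total_cells)


if __name__ == "__main__":
    # Assumed example values: 1000 samples, 10 bins per dimension.
    for dims in (1, 2, 3, 4, 6):
        frac = max_occupied_fraction(num_points=1000, bins_per_dim=10, dims=dims)
        print(f"{dims} dimensions: at most {frac:.4%} of cells are non-empty")
```

With the sample count held fixed, the non-empty fraction shrinks by a factor of `bins_per_dim` for every added dimension, which is the sense in which the null proportion grows exponentially.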

Sparsity in Space

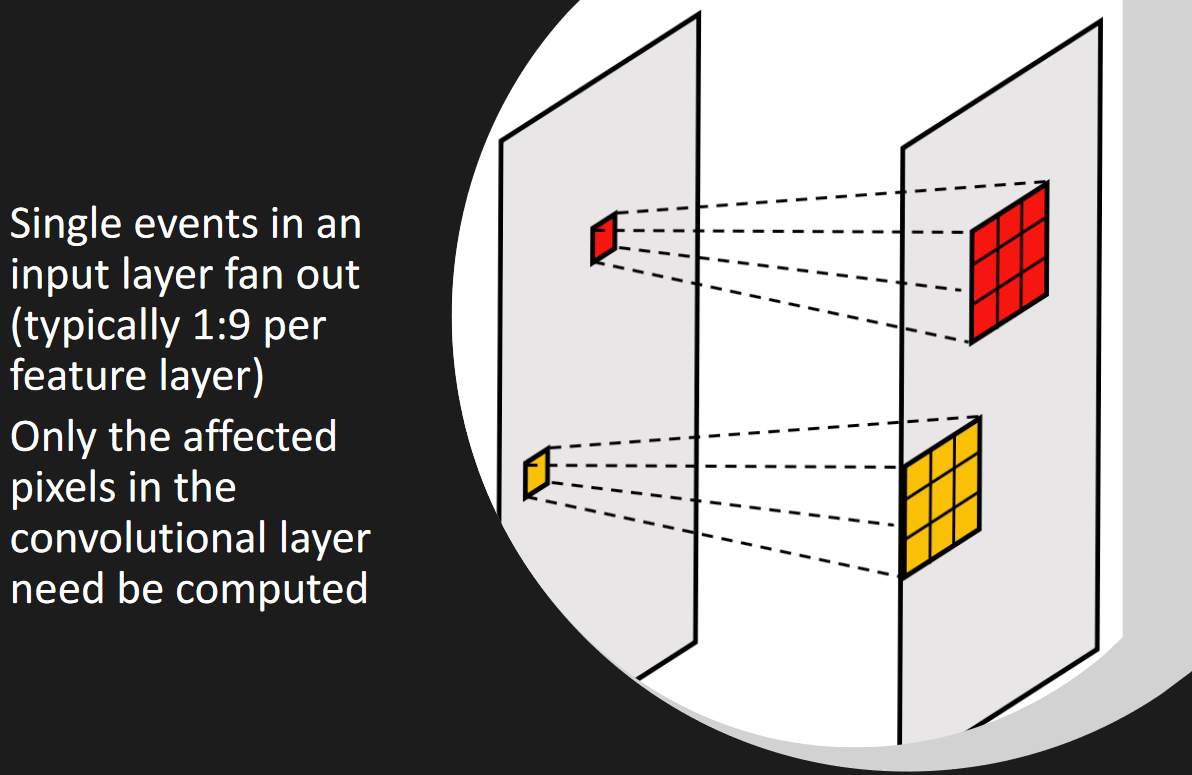

Event Based Convolutions

Single events in an input layer fan out (typically 1:9 per feature layer). Only the affected pixels in the convolutional layer need to be computed.

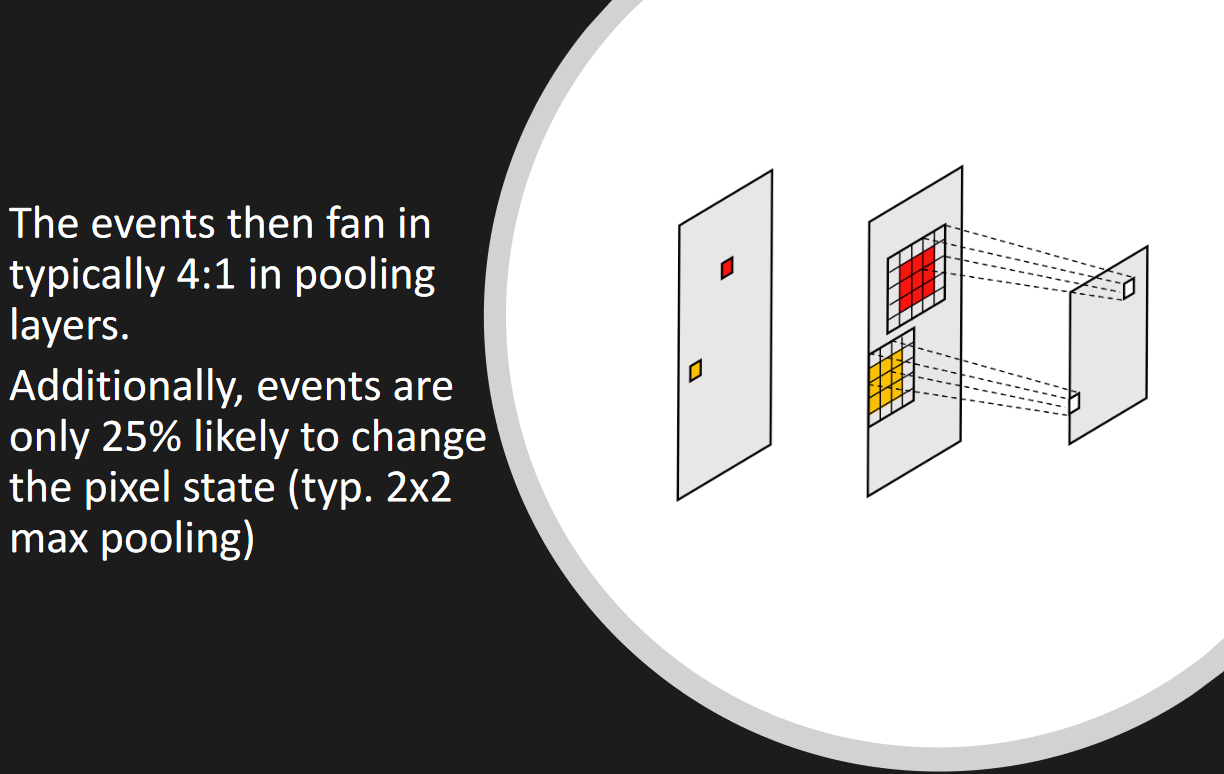
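A minimal sketch of the 1:9 fan-out, assuming a single-channel input, a 3x3 kernel, stride 1, and zero padding. The helper names `dense_conv2d` and `apply_event` are hypothetical and stand in for whatever the actual hardware or library does; the dense version is there only as a reference to check the event-driven update against.

```python
import numpy as np


def dense_conv2d(image, kernel):
    """Reference: zero-padded 2D cross-correlation with stride 1 (same output size)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)))
    out = np.zeros_like(image, dtype=float)
    for y in range(image.shape[0]):
        for x in range(image.shape[1]):
            out[y, x] = np.sum(padded[y:y + kh, x:x + kw] * kernel)
    return out


def apply_event(out, kernel, y, x, delta):
    """Update `out` in place for a change of `delta` at input pixel (y, x).

    Only the output pixels whose window covers (y, x) are touched.
    Returns the number of output pixels updated.
    """
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    touched = 0
    for dy in range(-ph, ph + 1):
        for dx in range(-pw, pw + 1):
            oy, ox = y - dy, x - dx  # output pixel that sees (y, x) at offset (dy, dx)
            if 0 <= oy < out.shape[0] and 0 <= ox < out.shape[1]:
                out[oy, ox] += delta * kernel[dy + ph, dx + pw]
                touched += 1
    return touched


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((8, 8))
    kernel = rng.random((3, 3))
    out = dense_conv2d(image, kernel)

    image[3, 4] += 0.5                        # one input event
    touched = apply_event(out, kernel, 3, 4, 0.5)
    print("output pixels updated:", touched)  # 9 for an interior pixel, 3x3 kernel
    print("matches dense recompute:", np.allclose(out, dense_conv2d(image, kernel)))
```

For an 8x8 input this touches 9 of 64 output pixels per event instead of recomputing all 64; events at the border touch fewer.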

The events then fan in, typically 4:1, in pooling layers. Additionally, each event is only 25% likely to change the pooled pixel state (typically, for 2x2 max pooling).

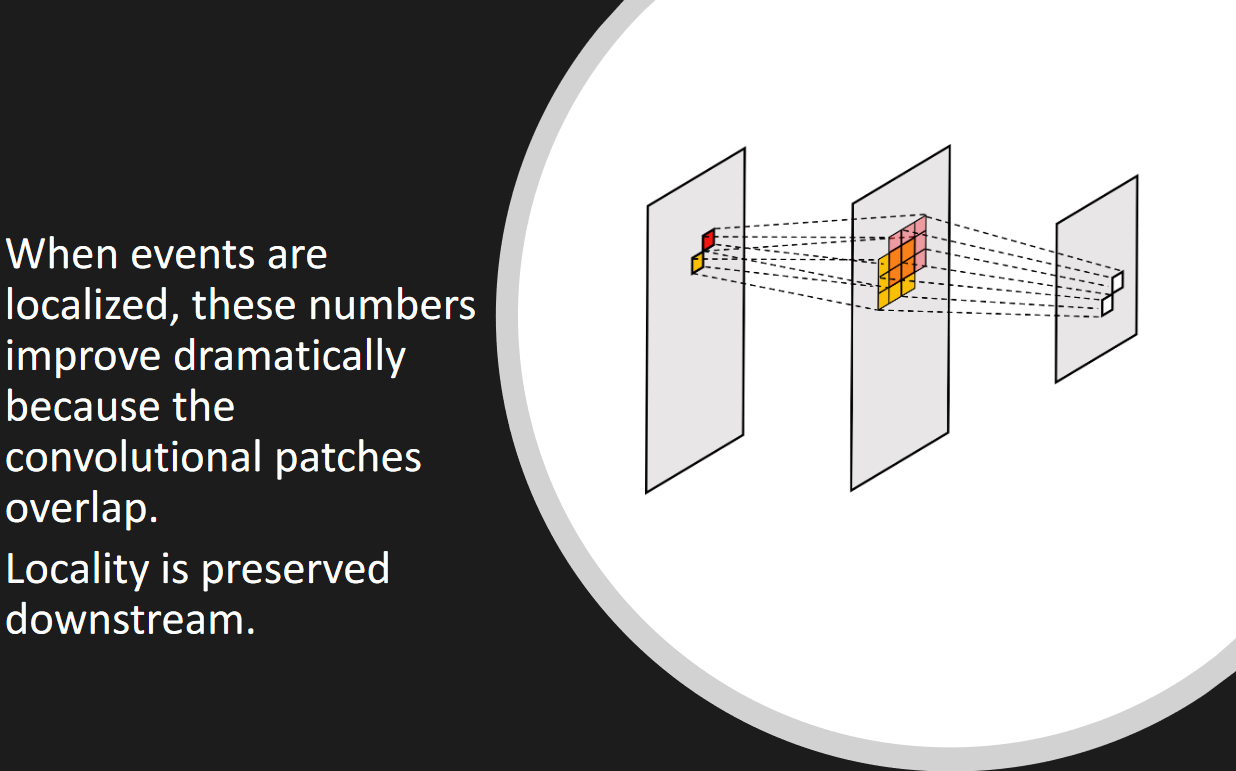
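The pooling step can be sketched in the same event-driven style. This assumes non-overlapping 2x2 max pooling on a single feature map with even height and width; `dense_max_pool` and `pool_event` are illustrative names. One way to read the 25% figure is that a small change only propagates when it lands on the pixel that currently holds the window maximum, which is one of four pixels; that reading is an interpretation, not something the slide spells out.

```python
import numpy as np


def dense_max_pool(feature):
    """Reference: non-overlapping 2x2 max pooling (height and width must be even)."""
    h, w = feature.shape
    return feature.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def pool_event(feature, pooled, y, x):
    """Recompute only the pooled cell covering input pixel (y, x).

    `feature` must already contain the new value at (y, x).
    Returns True if the pooled output changed, i.e. the event propagates downstream.
    """
    py, px = y // 2, x // 2
    new_max = feature[2 * py:2 * py + 2, 2 * px:2 * px + 2].max()
    changed = bool(new_max != pooled[py, px])
    pooled[py, px] = new_max
    return changed


if __name__ == "__main__":
    feature = np.array([[1.0, 2.0, 0.0, 0.0],
                        [3.0, 9.0, 0.0, 0.0],
                        [0.0, 0.0, 0.0, 0.0],
                        [0.0, 0.0, 0.0, 5.0]])
    pooled = dense_max_pool(feature)

    feature[0, 0] = 4.0   # still below the window maximum of 9: event is absorbed
    print("propagates:", pool_event(feature, pooled, 0, 0))

    feature[0, 0] = 10.0  # exceeds the window maximum: event propagates
    print("propagates:", pool_event(feature, pooled, 0, 0))
```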

When events are localized, these numbers improve dramatically because the convolutional patches overlap.

Locality is preserved downstream.

Exploiting Sparsity in Compute Architectures

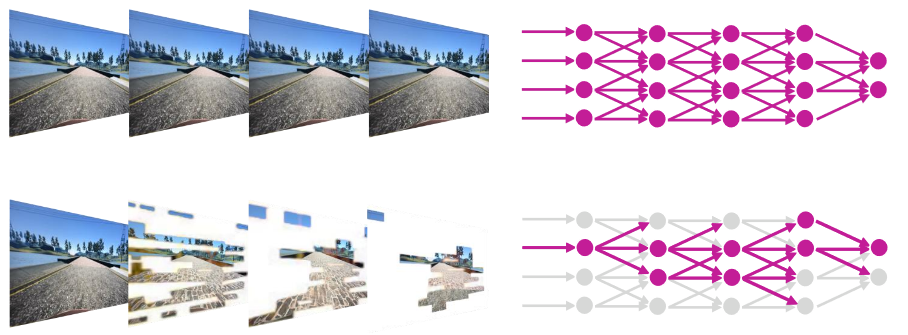

At each time step, only the difference from the previous frame needs to be computed, i.e., only the neurons affected by the changes are activated.
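A minimal sketch of this frame-difference idea for a single linear layer, assuming the previous input and previous output are kept in memory. The function name `delta_update` and the layer sizes are assumptions for illustration; the talk does not specify an implementation. Because a linear layer satisfies W(x + d) = Wx + Wd, only the weight columns for changed inputs need to be read.

```python
import numpy as np


def delta_update(weights, prev_input, prev_output, new_input):
    """Update a linear layer's output using only the inputs that changed.

    Returns the new output and the number of input elements that changed.
    """
    delta = new_input - prev_input
    changed = np.flatnonzero(delta)  # indices of inputs that differ from the last frame
    new_output = prev_output + weights[:, changed] @ delta[changed]
    return new_output, changed.size


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    weights = rng.standard_normal((16, 1000))
    frame0 = rng.random(1000)
    out0 = weights @ frame0               # full computation, done once

    frame1 = frame0.copy()
    frame1[[3, 250, 999]] += 0.1          # only three inputs change between frames

    out1, n_changed = delta_update(weights, frame0, out0, frame1)
    print("inputs processed:", n_changed, "of", frame1.size)
    print("matches full recompute:", np.allclose(out1, weights @ frame1))
```

The work per frame scales with the number of changed inputs rather than the input size, so a static scene costs almost nothing after the first frame.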